Hey! I’m back to report on the third week of my Senior Experience project. My main goal this week was to implement a positioning algorithm for my swarm drones.

Let me back up and explain what those big words mean.

The drones in my robot swarm are very simple. They can do only two things: drive (forward, backward, and steer left or right) and connect to the Internet. They have no on-board sensors, so they have no way of knowing where they are. They cannot sense when they are about to bump into each other, or even detect when they are about to drive off a table. If you have been keeping up with my blog, you’ll know that I have also been building an Internet-connected camera that tracks the locations of the swarm drones by their colored hats. Last week, I planned a system for the camera to send each drone’s location to the drones themselves. The last step is to program the drones to use that location data from the camera module to navigate around a space and drive to a target.

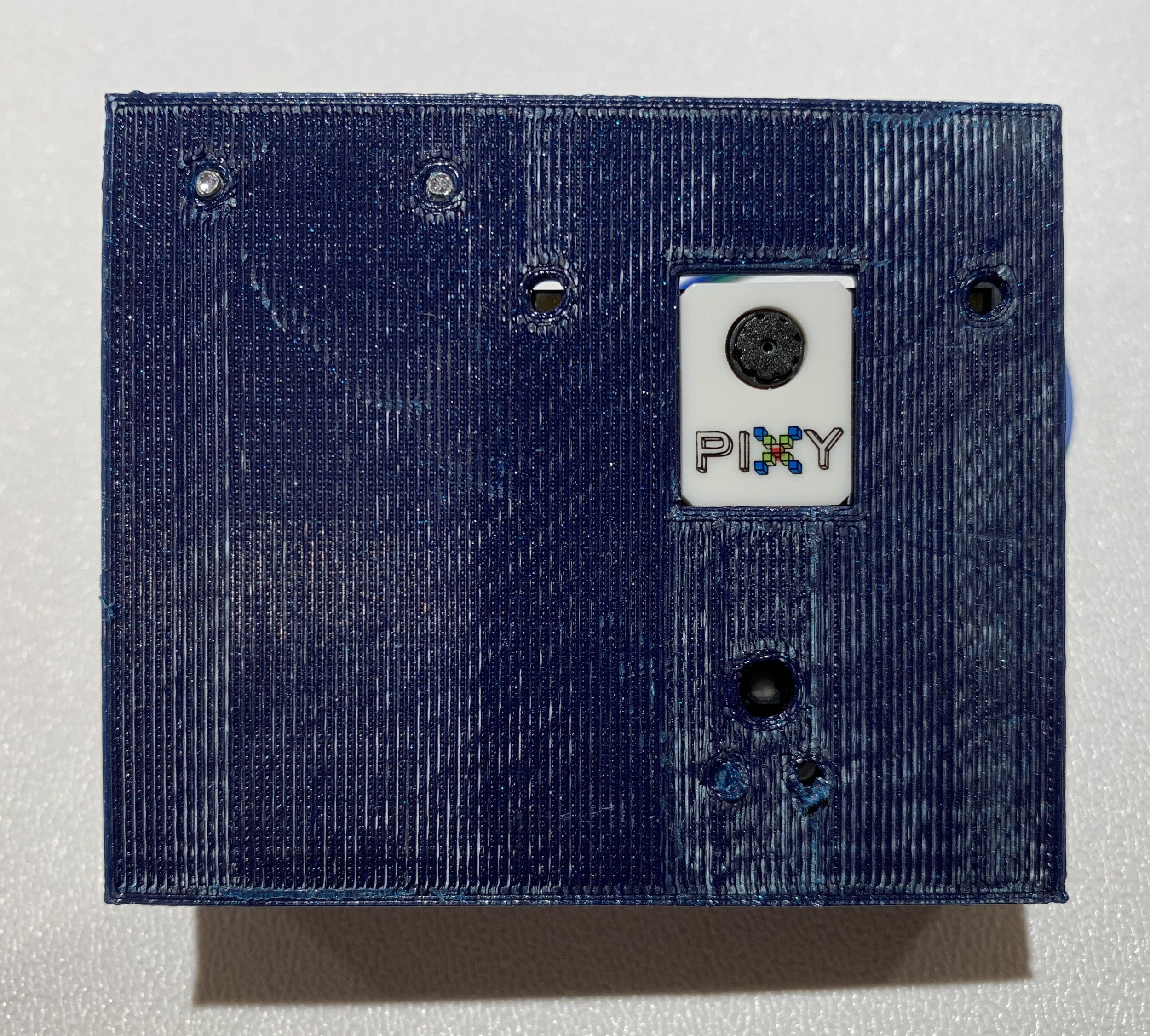

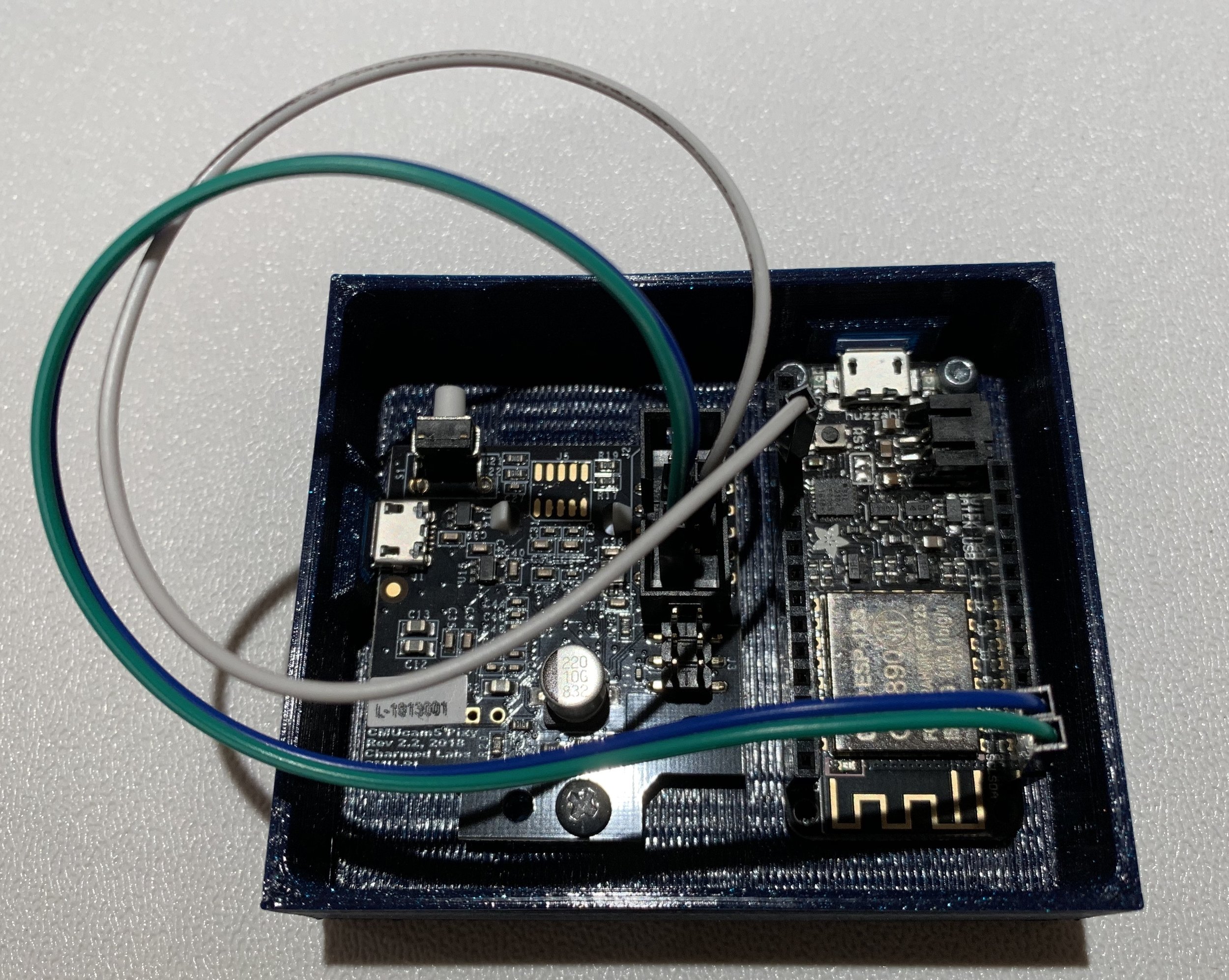

I started by assembling the camera module. The module consists of a Feather Huzzah WiFi board and the PixyCam camera that tracks the robots. I designed a 3D-printed case for these components that attaches to a tripod, which holds it above the table the drones will live on.

Next, I had to write code that makes the camera module output the drones’ location data in a format the drones can understand. Here is how the code works:

1. The WiFi board in the camera module asks the PixyCam for the coordinates and color of every drone it sees.
2. The WiFi board hosts a webserver that displays a string containing the color and XY coordinates of each drone it sees.
3. Every time a drone requests data from the webserver, the module rechecks what the camera sees and updates the locations.
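
For illustration, here is a minimal Python sketch of what that string could look like and how a drone might parse it. The exact format isn't specified above, so the `color,x,y;color,x,y` layout and the function names `format_locations` and `parse_locations` are hypothetical; the real firmware on the Feather Huzzah would look different.

```python
from typing import Dict, List, Tuple

# Hypothetical record format (an assumption, not the actual firmware's):
# one "color,x,y" entry per tracked drone, entries separated by ";".
# Example: "red,120,45;blue,30,200"

Blob = Tuple[str, int, int]  # (hat color, x pixel, y pixel)


def format_locations(blobs: List[Blob]) -> str:
    """Build the string the webserver would serve from the camera's detections."""
    return ";".join(f"{color},{x},{y}" for color, x, y in blobs)


def parse_locations(payload: str) -> Dict[str, Tuple[int, int]]:
    """Turn the served string back into {color: (x, y)} on the drone side."""
    locations: Dict[str, Tuple[int, int]] = {}
    for entry in payload.strip().split(";"):
        if not entry:
            continue  # tolerate an empty payload or a trailing separator
        color, x, y = entry.split(",")
        locations[color] = (int(x), int(y))
    return locations


if __name__ == "__main__":
    served = format_locations([("red", 120, 45), ("blue", 30, 200)])
    print(served)                           # red,120,45;blue,30,200
    print(parse_locations(served)["blue"])  # (30, 200)
```

Keying the result by hat color means each drone only needs to know its own color to pick its location out of the shared string.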

So, to recap: the camera module tracks the location of each drone, and each drone connects to the webserver to find out where it is. Here’s the tricky part: now that the drones know their current location, how can they navigate to another location? This is where the positioning algorithm comes into play. It works as follows:

1. The drone reads its current location from the webserver.
2. The drone drives with both wheels turning at the same speed for a short time interval.
3. The drone reads its location again.
4. The drone compares the two locations to determine how far it has moved and in what direction (the position vector).
5. The drone compares its current location with its target location to determine the distance and direction to that point (the objective vector).
6. It compares the two vectors and finds the angle between them.
7. If the objective vector is to the right of the position vector, the robot speeds up its left wheel to turn right, and vice versa.
8. Repeat until the robot roughly reaches the target.
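
The geometry of the loop above can be sketched in Python as a single steering update. This is a minimal sketch under a few assumptions: positions are (x, y) camera coordinates with y pointing up, and `BASE_SPEED`, `GAIN`, `ARRIVAL_RADIUS`, and the function names are hypothetical. The left/right test uses the sign of the 2D cross product: with h as the position (heading) vector and o as the objective vector, the signed angle is θ = atan2(h × o, h · o), which is negative when the target is to the right. Reading the webserver and driving the motors are left out.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

BASE_SPEED = 0.5       # hypothetical normalized wheel speed (0..1)
GAIN = 0.3             # hypothetical: how strongly the angle changes the wheel speeds
ARRIVAL_RADIUS = 10.0  # hypothetical "close enough" distance, in camera pixels


def signed_angle(heading: Point, objective: Point) -> float:
    """Angle from heading to objective in radians, in (-pi, pi].
    Positive means the objective is counterclockwise (to the left), assuming y points up."""
    cross = heading[0] * objective[1] - heading[1] * objective[0]
    dot = heading[0] * objective[0] + heading[1] * objective[1]
    return math.atan2(cross, dot)


def steer(prev: Point, curr: Point, target: Point) -> Tuple[float, float]:
    """One pass of the loop: return (left_speed, right_speed) for the next interval."""
    objective = (target[0] - curr[0], target[1] - curr[1])
    if math.hypot(*objective) <= ARRIVAL_RADIUS:
        return (0.0, 0.0)  # roughly at the target: stop
    heading = (curr[0] - prev[0], curr[1] - prev[1])
    if heading == (0.0, 0.0):
        # No measured motion yet, so drive straight to get a heading.
        return (BASE_SPEED, BASE_SPEED)
    angle = signed_angle(heading, objective)
    # Negative angle: target is to the right, so speed up the left wheel (and vice versa).
    correction = GAIN * angle / math.pi
    left = min(1.0, max(0.0, BASE_SPEED - correction))
    right = min(1.0, max(0.0, BASE_SPEED + correction))
    return (left, right)


if __name__ == "__main__":
    # Drone moved "up" from (0, 0) to (0, 10); the target is off to its right.
    print(steer((0, 0), (0, 10), (50, 10)))  # left wheel faster than right
```

If the camera’s y axis points downward, as is common for image coordinates, the sign of the cross product flips, so `angle` should be negated before the correction is applied. This sketch scales the correction with the angle so the drone turns harder when it is further off course; a fixed speed-up of one wheel, closer to the description above, would also work.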

Here is a picture to aid my explanation: